Table of Contents

How to compare algorithms?

Suppose, there is a number of algorithms solving a problem. How to compare which one is faster? One of the solutions is to run these algorithms multiple times and compare the average time used for each of the runs.

However, algorithms can be written in different programming languages or they can run on computers with different architectures. So results may be different. Actually, in this case, we compare the implementations of the algorithms and not the algorithmsthemselves.

Comparing algorithms requires abstracting out of programming language, operating system, hardware, programming skills and etc. The idea is to determine or estimate the time needed by an algorithm without referencing the implementation details.

The Time Complexity Function can be used to solve this task. The Time Complexity Function precisely shows how many operations are required to process the input data of an algorithm. It allows comparing algorithms without a reference to a particular implementation, hardware, coding skills and so on. The only important thing for the Time Complexity Function is a number of input elements (the size of the input data). The Time Complexity Function is denoted as T(n) where n is the size of the input data.

Counting the number of operations

Imagine that you have 44 pencils of different length in your hand. You need to sort them out from the longest one to the shortest one. What would you do?

You can find the longest pencils one by one and put them on a table in descending order until there are no more pencils in your hand. But how to find the longest pencil and how many operations are needed?

Here is the algorithm for 44 pencils:

- Choose a random pencil from pencils in your hand (11 operation). Name chosen pencil as a run candidate.

- Compare it with other pencils in your hand. If you find a longer pencil than a run candidate then use that longer one for further comparisons. That costs 4 – 1 = 34−1=3 operations.

Let’s name the procedure of finding the longest pencil LongestInHand. Finding the longest pencil from 4 pencils in your hand requires LongestInHand(4) = 1 + 3 = 4 operations. But how many operations are required to find the longest pencil if we have k pencils in hand? Choosing a random pencil always costs 11 operation. Comparison step costs k – 1 operations. Hence LongestInHand(k) = 1 + k – 1 = k operations where k is a number of pencils in your hand. We counted a number of operations for LongestInHand and hence we found the Time Complexity Function for that algorithm:

Let’s use LongestInHand to find the number of operations needed to sort pencils.

- Use LongestInHand(4) for 44 pencils in hand to find the longest pencil. That would cost 44 operations.

- Put the longest pencil on the table. 11 operation.

- Use LongestInHand(3) for 33 pencils in hand (one pencil is on the table now) to find the longest pencil. 33 operations.

- Put the longest pencil on the table on its place in descending order. 11 operation.

- Use LongestInHand(2) for 22 pencils in hand. 22 operations.

- Put the longest pencil on the table. $1$ operation.

- Use LongestInHand(1) for 11 pencil in hand. 11 operation.

- Put the last pencil on the table. 11 operation.

What about Time Complexity Functionfor that sorting algorithm?

We needed 14 operations to sort those pencils. But how many operations are required to sort nn pencils?

But what if you knew the secret? What if you simply took all pencils in your hand and hit them of the table by their ends? Now you would see that the longest pencil is higher than the others! The second in length is easy to identify when you remove the longest pencil and so on. What about Time Complexity Function now? One iteration of “secret” sorting algorithm requires only 2 operationsnow — take longest pencil (11 operation) and put it on the table in the right place (11 operation). So

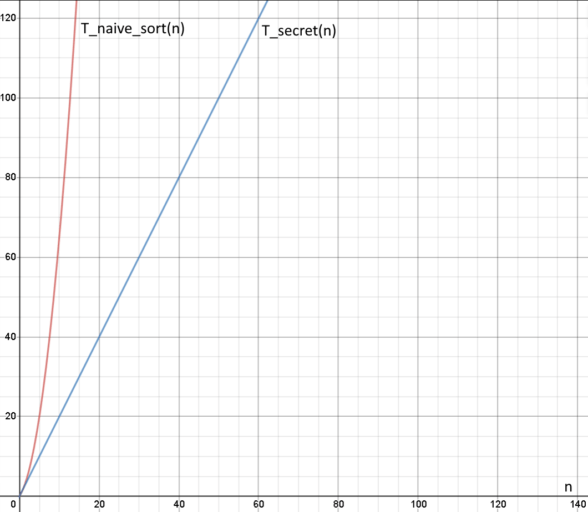

Let’s compare two algorithms now:

This graph shows the relationship between the input size nn (x-axis) and the number of operations T(n)T(n) the given algorithm performs (y-axis). This kind of graph is often used to represent time complexities of different algorithms and compare them because it is clear which algorithm performs faster.

Here we can see the “secret” algorithm for sorting the pencils is much faster than the naive one.

Summary

Time Complexity Function T(n) is used to determine how efficient the algorithm really is independent of its implementations. It depends only on nn, the size of the input data.

Time complexity is also useful for comparing different algorithms since we can determine the difference in their speed on the same data.

Calculating precise Time Complexity Function can be difficult so most of the time we use its asymptotic behavior represented by the Big O notation. You’ll get a chance to learn about it in another topic, don’t worry! Still, the main idea behind it is the notion of Time Complexity Function, so keep that in mind!